The community engagement project challenges students to create a professional development session to be presented at a conference of the student’s choosing. As part of building effective digital age environments, as prescribed by the ISTE Standards for Coaches #3, I chose to create an interactive session focused on active learning and digital collaboration tools to improve current practices in nutrition education. Technology currently has limited uses in nutrition education but considerable potential. Although nutrition information is plentiful in the digital world, the approach of dietitians and nutritionists has been to increase their presence through blogs, social media, and videos (such as those on YouTube), while the Academy of Nutrition and Dietetics (AND), the representative organization for all dietitians, has focused its efforts on instilling a code of ethics and providing information on privacy in the digital workplace. These efforts may help mitigate nutrition misinformation but are often one-sided or engage only limited populations. For example, blogs may allow comments but do not allow for active engagement with the blog topics, nor do they take into account implementation on a local level. Social media platforms such as Facebook, Pinterest, and Twitter allow nutritionists’ voices to be heard but rarely offer collaborative engagement among experts or communities. The solution is relatively simple, as the digital tools mentioned offer plenty of room for continued collaboration among participants at any level (local or global).

The Academy itself recognizes the potential of technology in nutrition and has published a practice paper on nutrition informatics. Nutrition informatics is a relatively new field in dietetics that addresses technology’s role in health practices. The Academy discusses the potential pros and cons for each of the various practice fields in dietetics (clinical, food services, education/research, community, consultation/business) and technology’s potential for growth in each of those areas. In education specifically, the Academy recognizes uses in distance learning, student progress tracking, specialty testing for licensing and certification, and professional course development. However, it does not mention the need for collaboration or for engaging the various audiences that require nutrition education.

To bridge this gap and address the ISTE Coaching Standard, the topic for this professional development proposal focuses on building better nutrition education through digital collaboration tools. The goal of this session is to explore the benefits of active learning through technology aids (EdTech) and to implement tools into existing lesson plans with the following objectives in mind:

- a) Understand and/or review the importance of active learning (evidence-based practice)

- b) Become familiarized with collaborative edtech tools

- c) Engage with edtech tool selection criteria and best practices

- d) Explore ways to incorporate digital tools in lesson plan scenarios.

Professional Development Session Elements

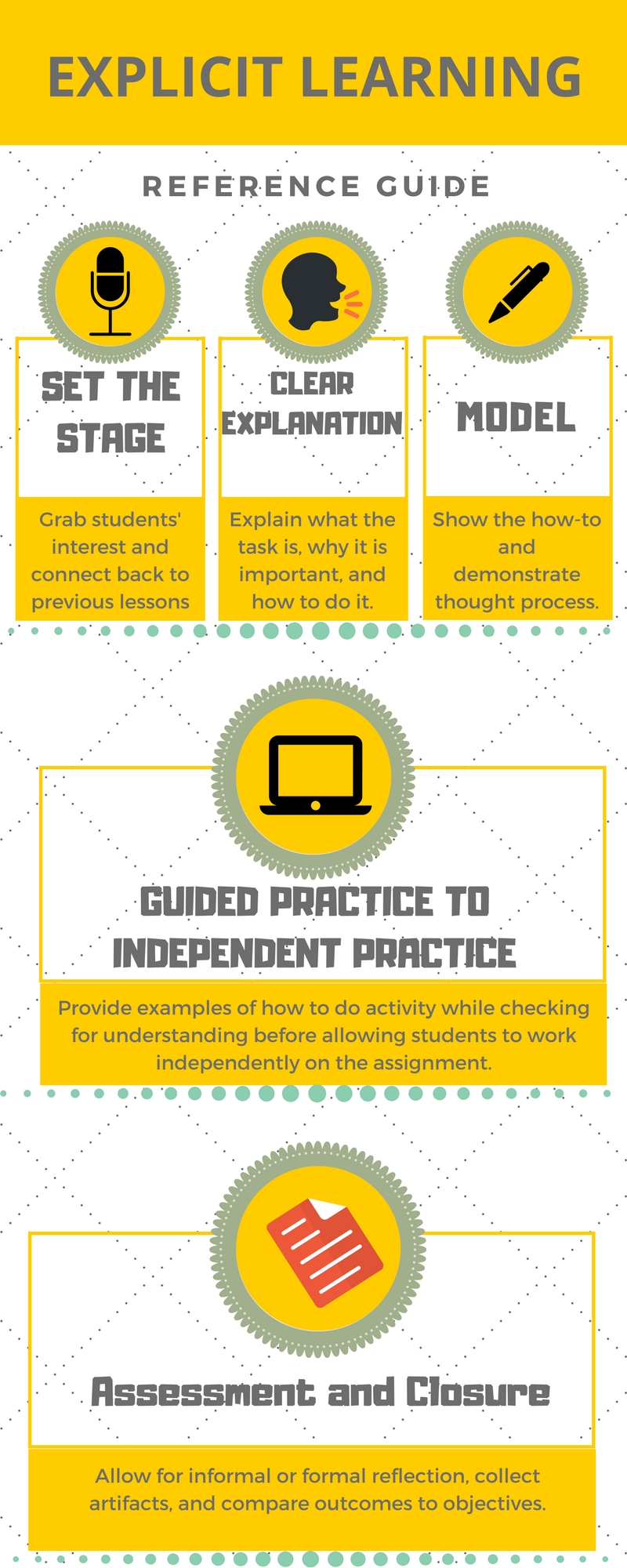

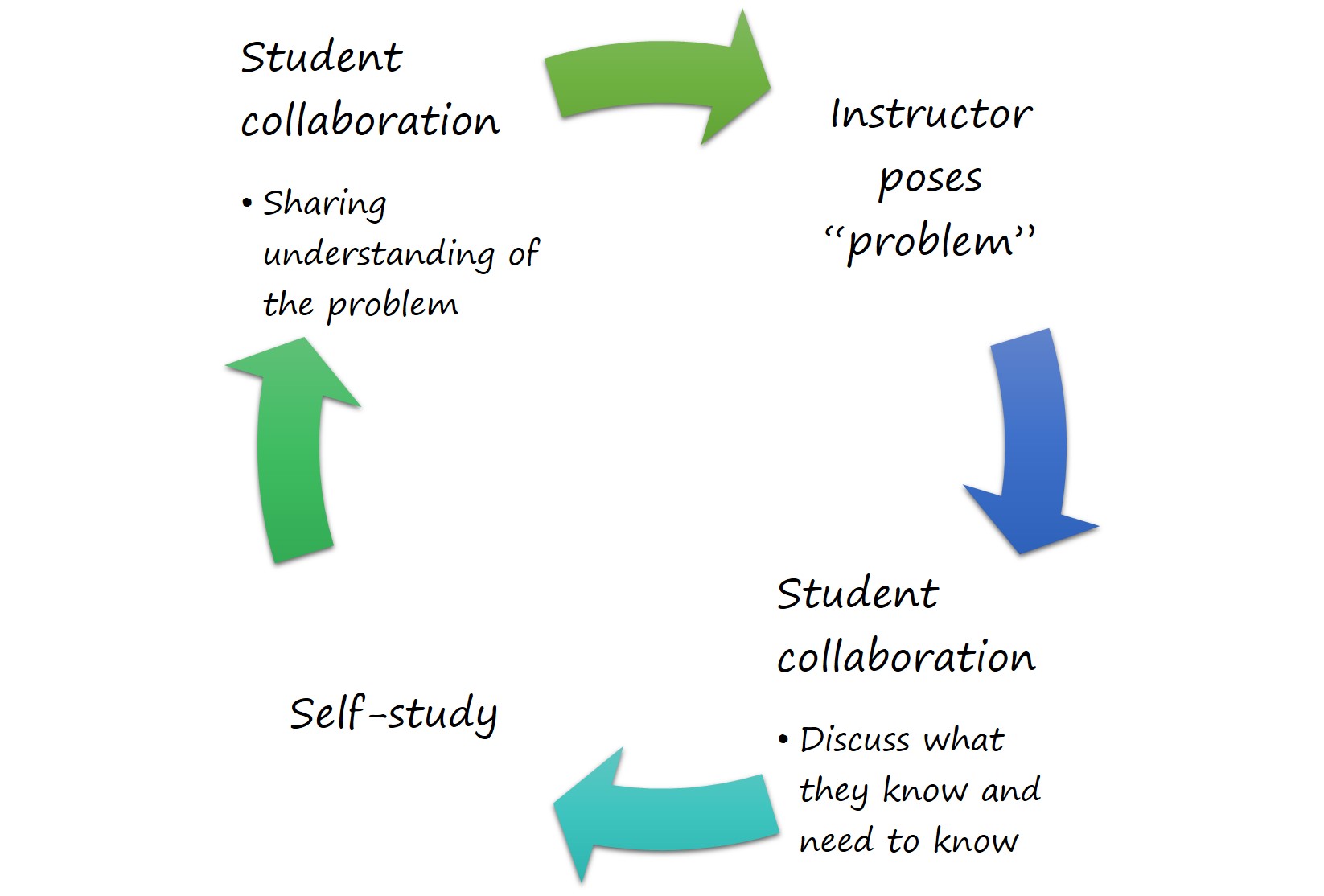

In this one-hour session, participants will be invited to explore the main topic through both face-to-face and online collaboration, as the entire group navigates through a website developed specifically for the presentation. Since all of the major content is available to them online, there is no need for note-taking, allowing participants to remain engaged throughout the session. Elements of the session include a pre-session technology self-assessment, an online group discussion via Padlet, think-pair-share elements, and self-reflection elements submitted during and after the session. More details on these elements are provided below.

Length. The Academy hosts local sub-organizations in each state. I chose to develop this professional development session for local dietitians and nutrition educators, with the opportunity to present at the local education conference held annually. The requirements of this local organization state that all educational sessions must be a minimum of one hour in length. This is to meet the CEU (continuing education unit) minimum for registered dietitians. Considering that through the DEL program we have taken entire classes dedicated to active learning and digital tools, the length will limit the depth of information presented. However, the ability of participants and presenter to keep collaborating will allow for continued resource sharing after the session has ended.

Active, engaged learning with collaborative participation. Participants will be encouraged to participate and collaborate before, during, and after the session for a full engagement experience. The audience will be asked intermittently to review certain elements of the presentation website, available here, as they discuss key elements with the participants next to them. See Figure 1.1 for lesson plan details.

| Building Better Nutrition Education Through Digital Collaboration Tools | |
| --- | --- |
| Objectives | Session Goal: Introduce ways to incorporate digital collaboration tools into existing nutrition education lesson plans. Learning Objectives: At the end of the session participants will: |
| Performance Tasks | |
| Plan Outline | Total session length: 60 mins. |

Figure 1.1 “Building Better Nutrition Education through Digital Tools” Session Lesson Plan.

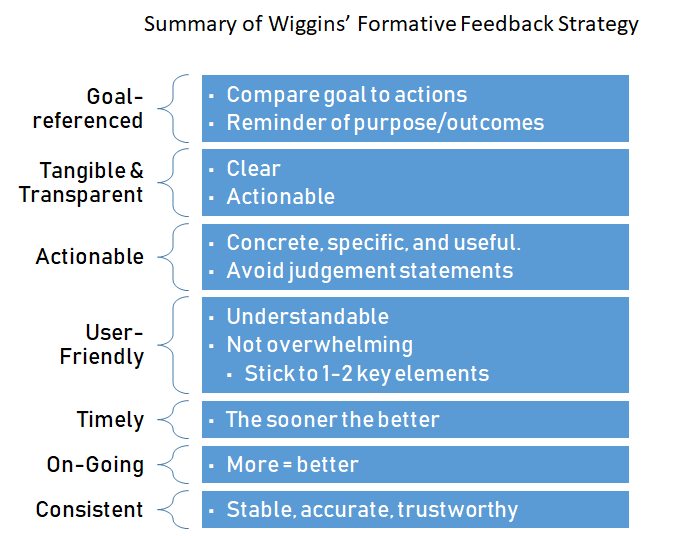

Before the presentation, the participants will be invited to complete a Google Forms self-assessment poll addressing their comfort and knowledge with technology tools as well as their current use of technology tools in practice. During the presentation, the audience will be prompted to participate in “think, pair, share” elements, as well as respond to collaboration tool prompts on Padlet, Google Forms, and embedded websites. After the presentation, participants will be encouraged to summarize their learning by submitting a Flipgrid video.

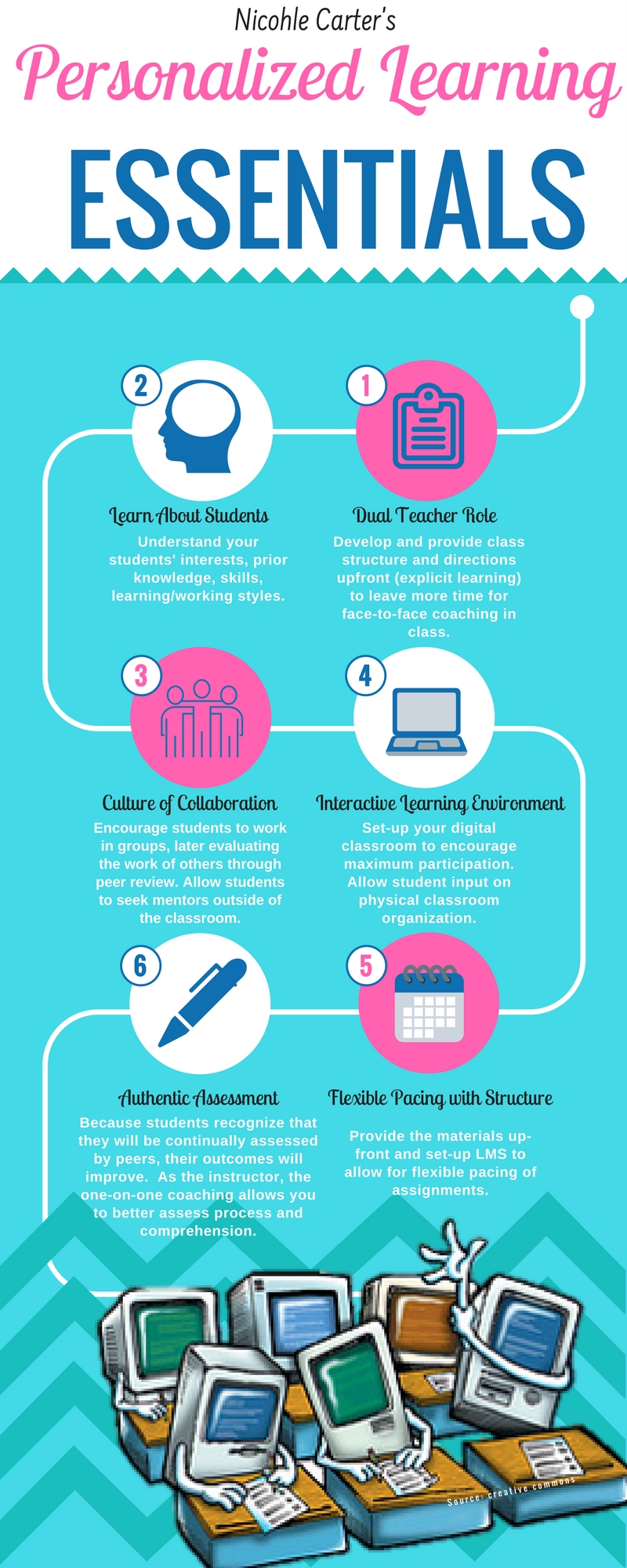

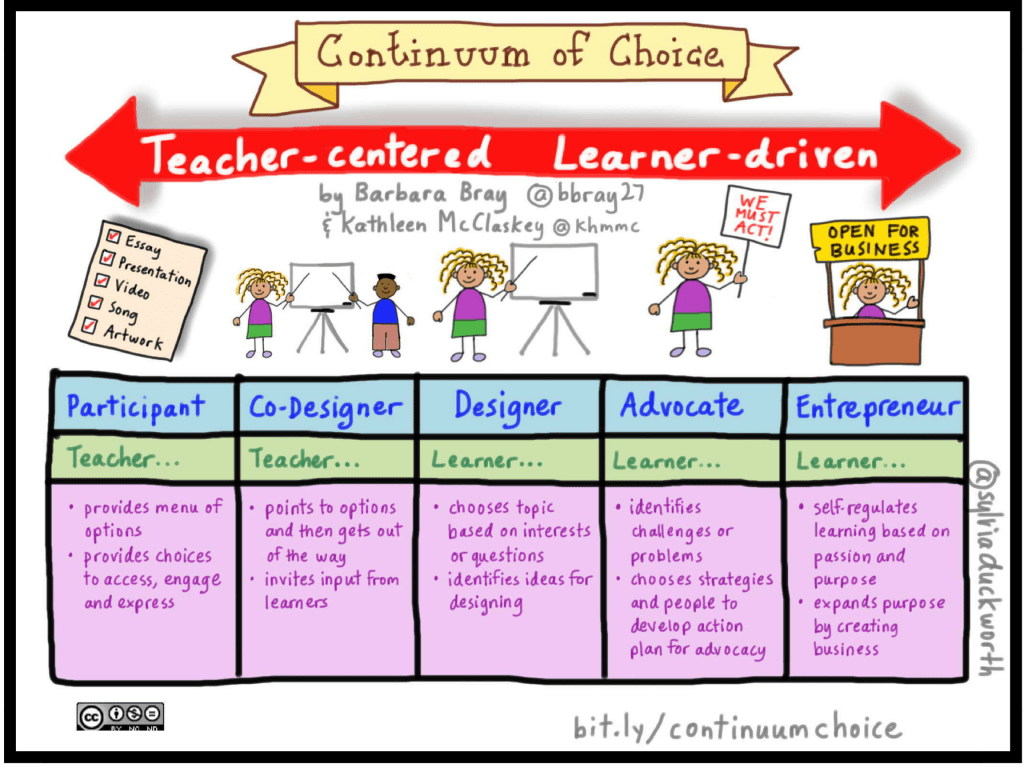

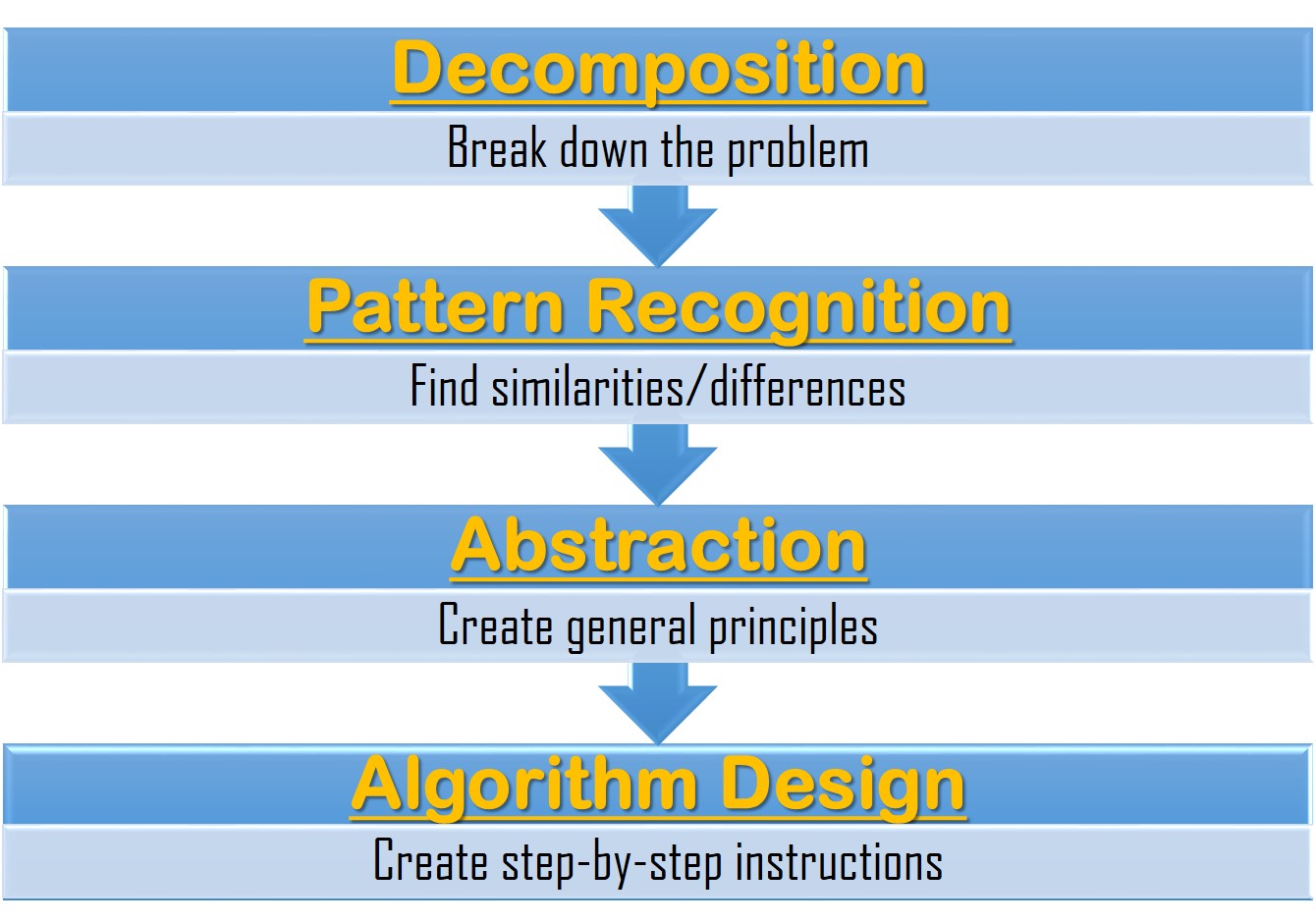

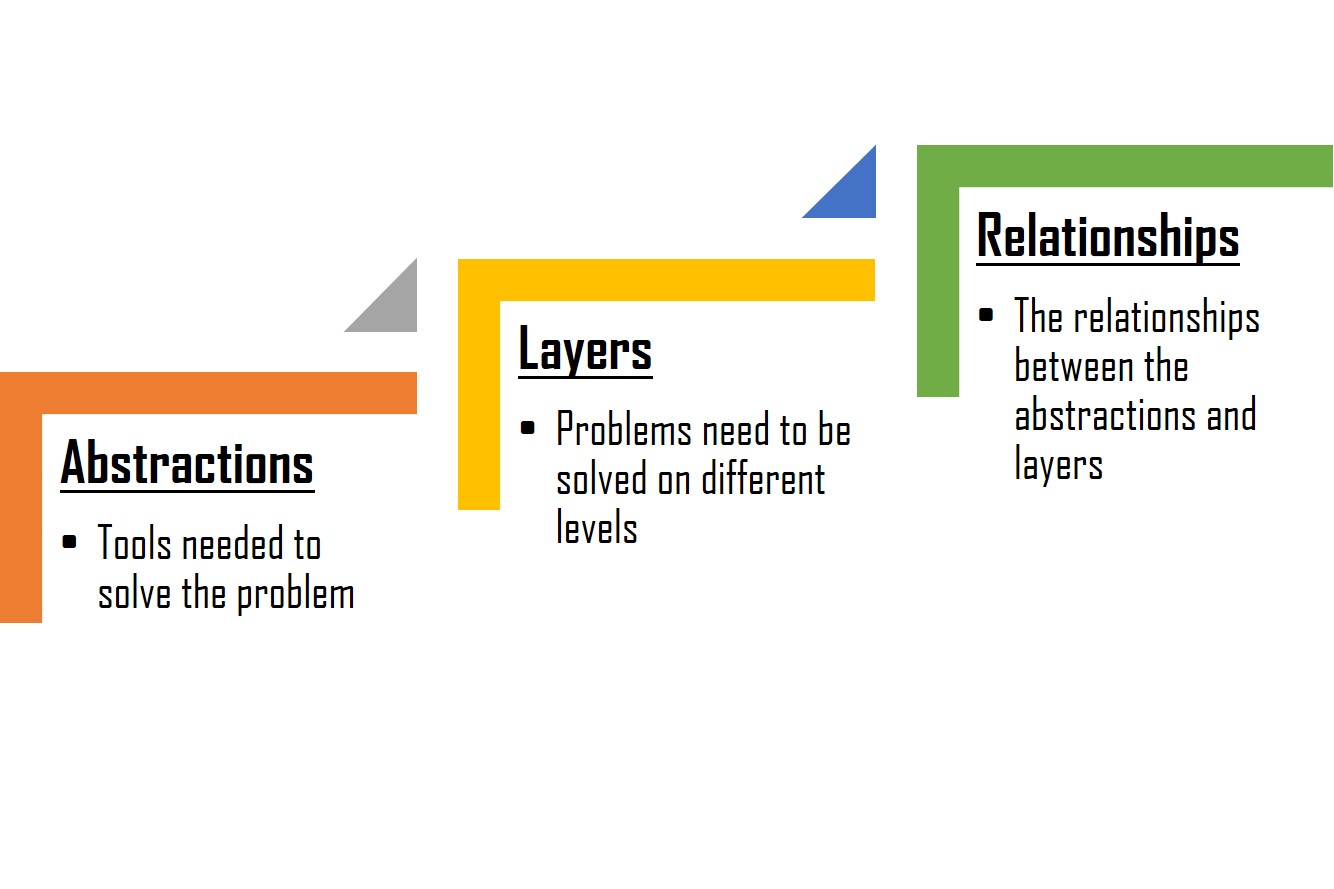

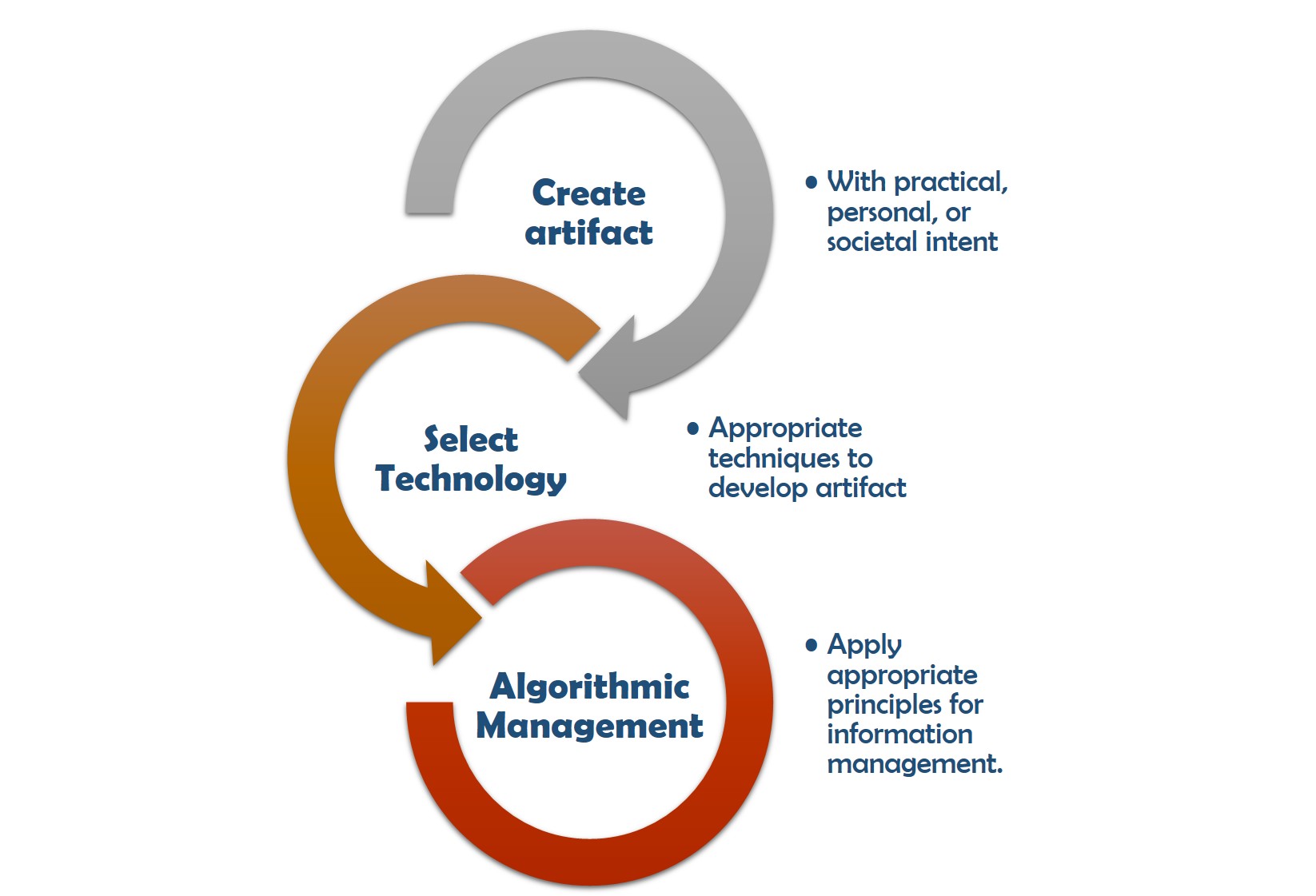

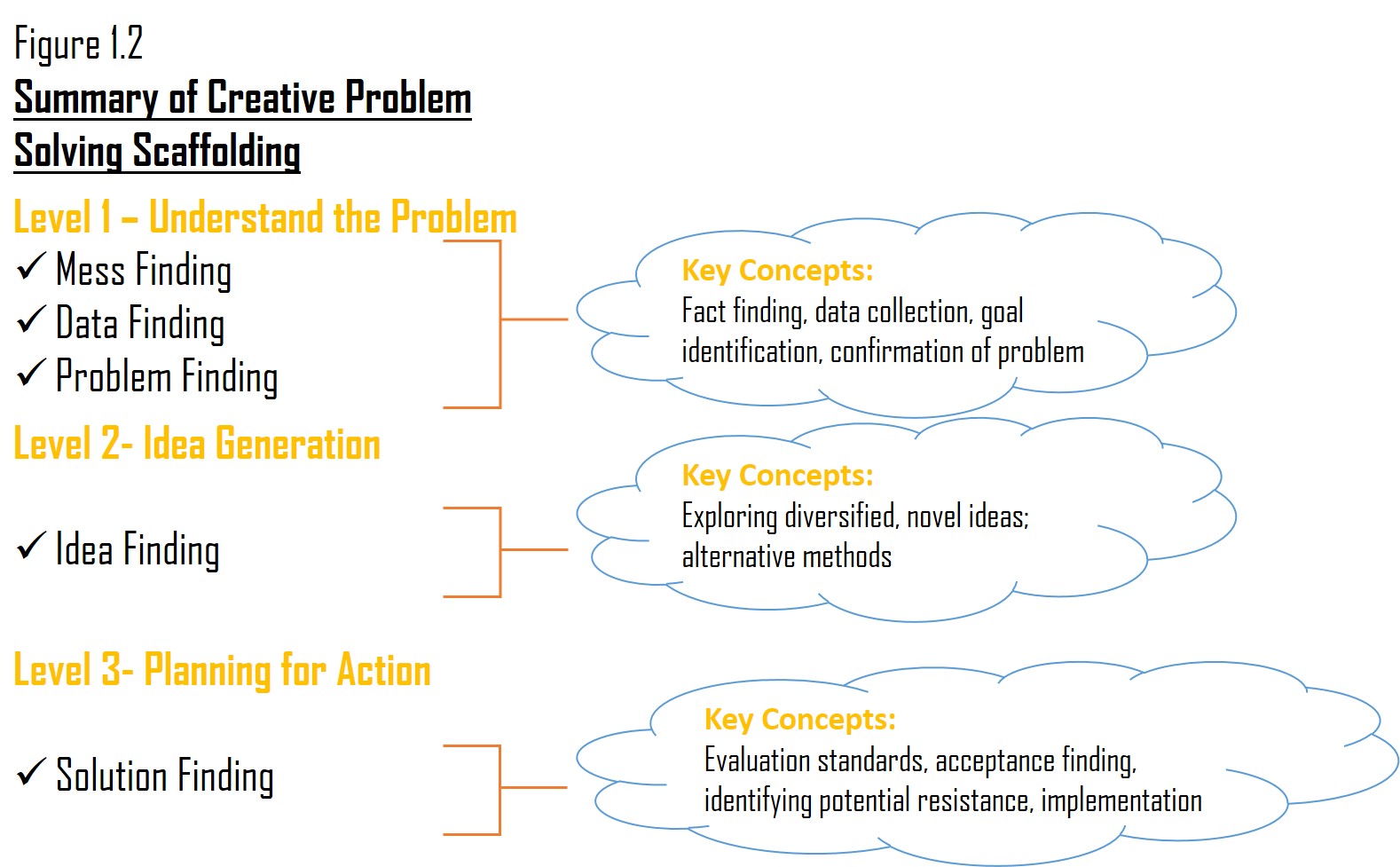

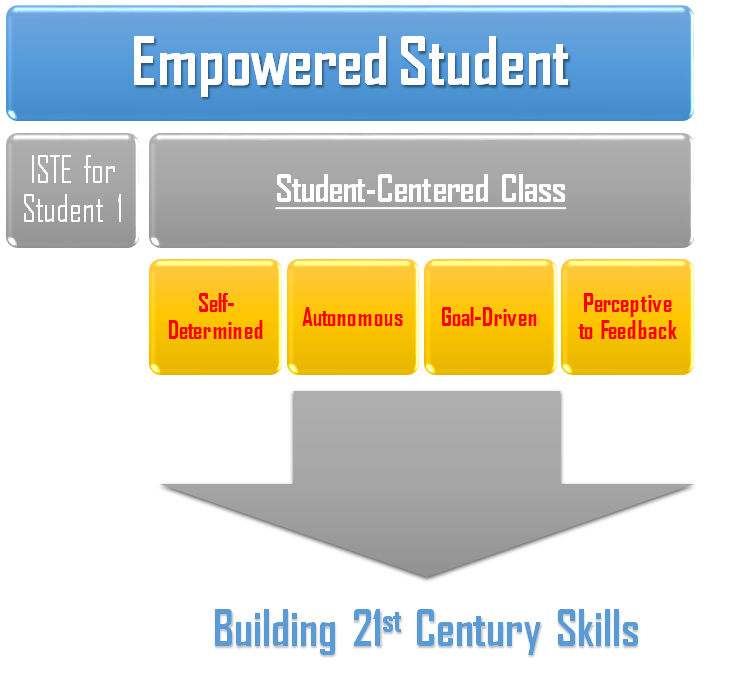

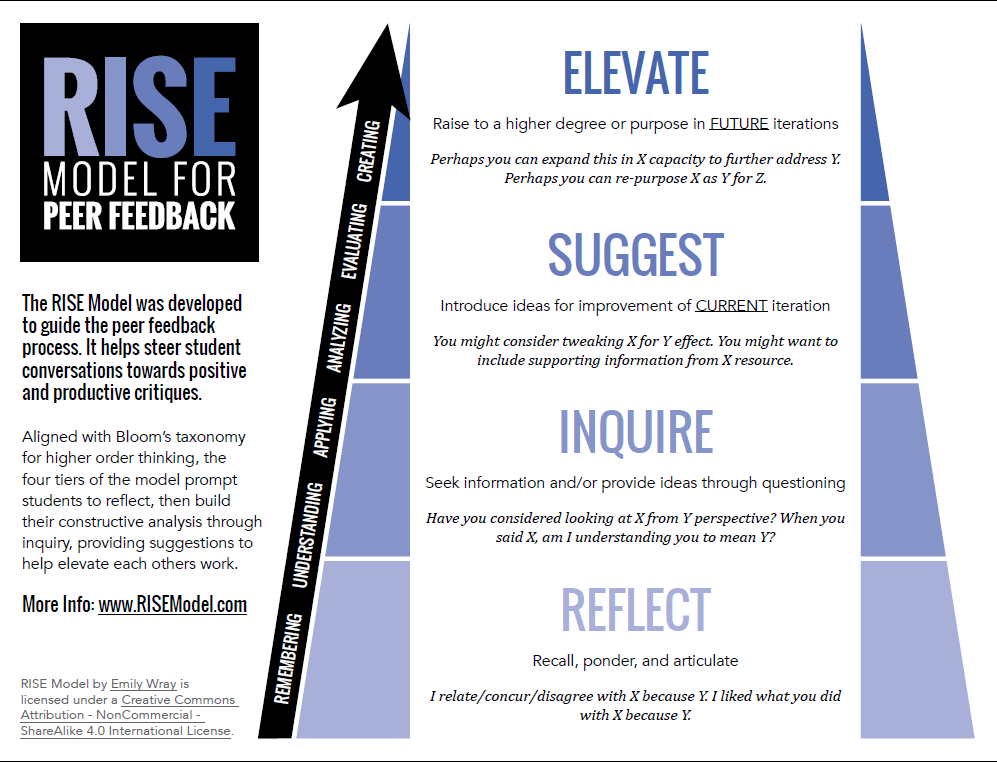

Content knowledge needs. The session content begins with establishing the importance of active learning as evidence-based practice to meet objectives a) and b). Just as motivational interviewing and patient-centered practice are desirable in nutrition, active learning invoking 21st century skills is evidence-based and an education standard. The content will then shift into teacher-focused how-tos for digital tools, including how digital tools can help, how to select the right digital tool, and how to incorporate that tool into an existing lesson plan, to address objectives c) and d). My assumption is that participants who are not comfortable with technology may be fearful or lack the motivation to explore various tools. Group collaboration, modeling, and gentle encouragement through case studies may help mitigate these fears.

Teachers’ needs. While the majority of the session focuses on introductory content on active learning and digital tools, teachers’ needs in digital tool management can be addressed through coach/presenter modeling. Simple statements such as, “I created this Flipgrid video to serve as a model for students,” or “This Google Form was hyperlinked to gauge students’ understanding so far,” can serve as a basis to explore class management and digital tool management within the limited time. The website itself offers a section on FAQs, exploring questions and misconceptions about active learning and digital tools. Beyond these resources, the audience will be introduced to technology coaching and may choose to consult a coach at their current institution.

In addition to modeling, three tutorial videos are available on the website to help teachers begin creating their own active learning lesson plans using the backwards design model. Each of the tutorials features closed captions created through TechSmith Relay for accessibility. Google Sites was also chosen because content is made automatically accessible to viewers; all the website creator has to do is apply the appropriate heading styles and use alt text for pictures, figures, and graphs.

Lessons Learned through the Development Process.

One of the major challenges in developing this project was understanding the needs of the target audience. Because nutrition informatics is relatively new, technology use has not been standardized in the profession; therefore, estimating the audience’s previous knowledge and use of digital tools was difficult. My assumption is that technology use and attitudes about technology will be varied. The website attempts to break down information to a semi-basic level. The only assumption I made was that the audience has a good background in standard nutrition education practices. I also chose to develop the Technology Self-Assessment for the audience to complete prior to the session as a way to gain some insight into current technology use and comfort so that I may better tailor the session to that particular audience’s needs.

I realized as I was developing the lesson plan for this session that I only have time to give a brief introduction to these very important topics. If I were to create a more comprehensive professional development program, I could expand the content into three one-hour sessions: 1) an introduction to the theory of collaborative learning, which would address the importance of digital tools in nutrition education and establish the need for active learning; 2) selecting, evaluating, and curating tech tools, allowing educators to become familiar with available tools based on individual need; and 3) lesson plan development integrating collaboration tools, a “how-to” session where participants create their own plan to implement. I had not anticipated that length was going to be a barrier; however, if the audience truly has limited digital familiarity and comfort, perhaps beginning with an introduction to these topics is sufficient.

One positive lesson that I’ve learned is that trying new things, such as creating a Google Site, can be very rewarding. I had never experimented with Google Sites prior to this project and I am quite happy with the final website, though the perfectionist in me wants to continue tweaking and editing content. I was originally aiming to create slides for this presentation but realized that I was attempting to convince a possibly skeptical audience of the benefits of digital tools, so using the same old tool would not allow me to do the scope of modeling I desired.

I must admit that before this project, I had a hard time placing myself into the role of a “tech coach” because I would continually see each concept through the lens of an educator and how to apply the concepts to my own teaching. It has been difficult for me to take a step back and realize that I am still teaching, just in a different context. Creating the step-by-step tutorials was the turning point where I envisioned the audience modeling their lesson plans on the example I had given. I hope I have the opportunity to present this session at the educational conference and bring the ideals of active learning and digital tools to professionals working in various education settings.