Digital tools in the classroom are an asset to learning. According to the U.S. Department of Education, technology in the classroom ushers in a new wave of teaching and learning that can enhance productivity, accelerate learning, increase student engagement and motivation, and build 21st century skills (U.S. Department of Education, n.d.). The offerings of technology tools for the classroom are plentiful as priorities shift to support a more integrated education. Educators now have several options for cultivating digital tools to better engage students, promote active learning, and personalize instruction. But choosing the right tools can be challenging, especially considering that educators face a seemingly overwhelming array of options. How can educators filter through all of the options to select the best tool(s) for their classroom?

Part of the solution is enlisting the help of a technology coach who can systematically break down the selection process to ensure that the most appropriate tools are used. In keeping with best practices, the third ISTE standard for coaching (3b) states that in order to support effective digital learning environments, tech coaches should manage and maintain a wide array of tools and resources for teachers (ISTE, 2017). In order to cultivate those resources, coaches themselves need a reliable way to select, evaluate, and curate successful options. Much like an educator may use a rubric or standards to assess an assignment’s quality, coaches can develop specific criteria (even a rubric) to assess the quality of technology tools.

Tanner Higgin of Common Sense Education understands the barrage of ed tech tools and the need for reliable tech resources, which is why he published an article describing what makes a good edtech tool great. The article seems to be written more from a developer’s point of view on app “must-haves”; however, Higgin also makes reference to a rubric used by Common Sense Education to evaluate education technology. He mentions that very few of the tech tools reviewed receive a 5 out of 5 rating, which makes me assume that Common Sense Education has a rigorous review system in place. I was curious to learn what criteria they use to rate and review each tool, so I investigated their rating process. In the about section on their website, Common Sense Education mentions a 15-point rubric, which they do not share. They do share, however, the key elements included in their rubric: engagement, pedagogy, and support (Common Sense Education, n.d.). They also share information about the reviewers and how they decide which tools to review. This information serves as a great jumping-off point in developing criteria for selecting, evaluating, and curating digital tools. Understanding the thought process of an organization that dedicates its time and resources to this exact purpose is useful for tech coaches in developing their own criteria.

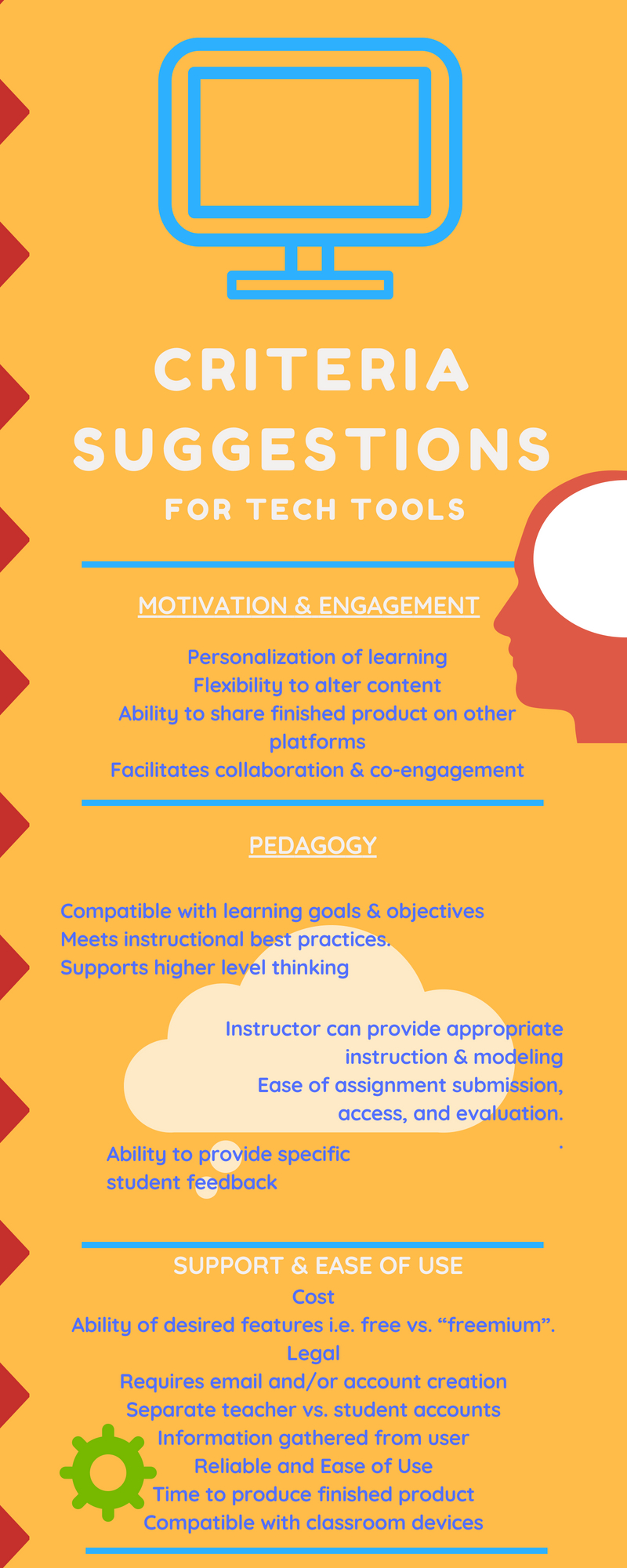

Continuing the search for technology tool evaluation criteria led me to several education leaders who share their process through various blog posts and articles. As I read through the suggested criteria, a common theme started to develop. Most of the suggested criteria fit under the umbrella terms defined by Common Sense Education, with a few modifications, which are synthesized in figure 1.1 below.

The educational leaders agreed in placing emphasis on the engagement and collaboration features of a tool. Tod Johnston from Clarity Innovations noted that a good tech tool should allow for personalization or differentiation of the learning process and should also allow the instructor to modify the content as needed for each class (Johnston, 2015). ISTE author Liz Kolb added that tools that allow for scaffolding help to better support differentiation (Kolb, 2016). Both the Edutopia and ISTE authors agreed that the sociability and shareability of the platform were important for engaging students with wider audiences (Hertz, 2010; Kolb, 2016).

While engagement was a key element of selecting a tech tool for the classroom, even more important was how the tool fared in the realm of pedagogy. First and foremost, the technology needs to play a role in meeting learning goals and objectives (Hertz, 2010). Secondly, the tool should allow for instructional best practices, including appropriate methods for modeling and instruction on the device, and functionality for providing student feedback (Hertz, 2010; Johnston, 2015). Another pedagogical consideration is the ability of the platform to instill higher-level thinking rather than “skill and drill” learning (Kolb, 2016). Specific pedagogy frameworks such as the SAMR and Triple E models have been created and can be used in conjunction with these principles.

Support and usability were among the top concerns in evaluating these tools. Cost, and which of the desired features were available at each price point, was one of these concerns. Another was whether students needed to create an account or provide an email address (Hertz, 2010). Hertz called the cost issue free vs. “freemium”, meaning that some apps only allow access to limited functionality of the platform, while full functionality can only be accessed through the purchase of premium packages. If the platform was free, the presence of ads would need to be assessed (Hertz, 2010). In terms of usability, features such as an easy interface, instructor management of student engagement, and separate teacher/student accounts were desirable (Johnston, 2015). Along with cost and usability, app reliability and compatibility with existing technology were also listed as important features (Johnston, 2015).

The evaluation process itself varied, ranging from curated lists of the top tech tools to criteria suggestions and even completed rubrics. If those don’t quite apply to a specific evaluation process, a unique approach would be to convert the rubric into a schematic like the one shared by Denver Public Schools, where each key evaluation element is presented as a “yes” or “no” question with a “yes, then” or “no, then” response, following a clear, decisive trajectory toward approval or rejection.
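For coaches who like to see this kind of schematic written out, here is a minimal Python sketch of a yes/no evaluation flow. It is only an illustration: the questions, their order, and the names `FLOW` and `evaluate` are my own assumptions drawn loosely from the criteria discussed above, and they are not taken from the Denver Public Schools schematic.

```python
# Hypothetical yes/no flow for vetting a tech tool.
# Each entry: key -> (question, next step on "yes", next step on "no").
# The questions and their order are illustrative assumptions only.
FLOW = {
    "goals": ("Does the tool support a learning goal or objective?", "free", "reject"),
    "free": ("Is the needed functionality available for free?", "ads", "budget"),
    "ads": ("Is the free version clear of inappropriate or distracting ads?", "compatible", "reject"),
    "budget": ("Can the budget cover the premium features needed?", "compatible", "reject"),
    "compatible": ("Is it reliable and compatible with existing technology?", "approve", "reject"),
}

VERDICTS = {"approve", "reject"}


def evaluate(answers, start="goals"):
    """Walk the flow using a dict of {question key: True/False}.

    Returns the verdict and the list of question keys that were visited.
    Only questions actually reached need an answer.
    """
    step = start
    path = []
    while step not in VERDICTS:
        _question, on_yes, on_no = FLOW[step]
        path.append(step)
        step = on_yes if answers[step] else on_no
    return step, path


if __name__ == "__main__":
    # A "freemium" tool whose premium tier fits the budget.
    verdict, path = evaluate(
        {"goals": True, "free": False, "budget": True, "compatible": True}
    )
    print(verdict, path)
```

Which questions act as hard stops is a judgment call for each coach and school. A "no" could just as easily route to a follow-up question or a conversation with the teacher instead of a rejection.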

What I’ve learned through the exploratory process of developing evaluation criteria for tech tools is that it is not important or necessary that a tool meet every single criterion. Even the educational and tech experts reviewed in this blog emphasized different things in their criteria. In his blog, Tod Johnston suggests that there is no right or wrong way to evaluate technology tools because this isn’t a cookie-cutter process. Just as all teachers have a different style and approach to teaching, so do they have a different style and approach to using tech tools. The key to evaluating tools is to find the one that best fits the teacher’s needs (Johnston, 2015).

Resources

Common Sense Education. (n.d.). How we rate and review. Available from: https://www.commonsense.org/education/how-we-rate-and-review

Hertz, M. B. (2010). Which technology tool do I choose? Available from: https://www.edutopia.org/blog/best-tech-tools

ISTE. (2017). ISTE standards for coaches. Available from: https://www.iste.org/standards/for-coaches

Kolb, L. (2016, December 20). 4 tips for choosing the right edtech tools for learning. Available from: https://www.iste.org/explore/articleDetail?articleid=870&category=Toolbox

Johnston, T. (2015). Choosing the right classroom tools. Available from: https://www.clarity-innovations.com/blog/tjohnston/choosing-right-classroom-tools

Vincent, T. (2012). Ways to evaluate educational apps. Available from: https://learninginhand.com/blog/ways-to-evaluate-educational-apps.html

U.S. Department of Education. (n.d.). Use of technology in teaching and learning. Available from: https://www.ed.gov/oii-news/use-technology-teaching-and-learning