In its intention, professional development (PD) offers an opportunity for individuals to learn about new advancements in their respective fields, including industry best practices. However, PD is often criticized for failing to offer relevant content, format, or context. In the DEL program, we were asked for our opinions on what makes for good PD. I reflected upon my experiences and noted that good professional development should be actionable, timely, and applicable. PD should focus less on the “what” and more on the “how”. My colleagues commented that good PD is characterized by interaction, relevance, purposefulness, and a focus on the learner. On the other hand, bad PD can be characterized as singular, stoic, and passive. Looking back on my own experiences, I remember one PD training I took that was a five-hour-long video of a therapist droning on about the physiology of stress. While the topic was interesting (for about half an hour), without any engagement or application the training quickly felt like an endless lecture. Moreover, what made it bad is that the PD worked on the premise that a bombardment of facts equates to deep knowledge. However, “having knowledge in and of itself is not sufficient to constitute as expertise” (Gess-Newsome et al., n.d.).

Criteria for Good Professional Development.

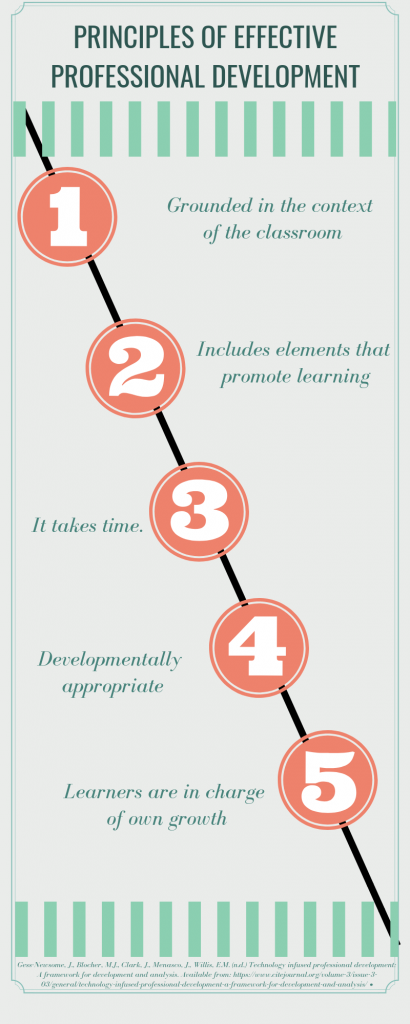

Because my colleagues and I all work in education and have experienced our fair share of PD, both good and bad, we were able to use our personal experience to determine the above criteria. Research on how we (humans) learn demonstrates that my classmates and I were not wrong. The goal of any professional development should be to impact student learning by augmenting pedagogical content knowledge (Gess-Newsome et al., n.d.). In other words, the main idea behind PD is to help individuals become experts. According to Gess-Newsome et al., expert knowledge is deep, developed over time, contextually bound, organized, and connected to big ideas (Gess-Newsome et al., n.d.). This is interesting considering that most PD is offered in a single session of about an hour, hardly enough to begin the application and reflection necessary for that content to become “expert knowledge.”

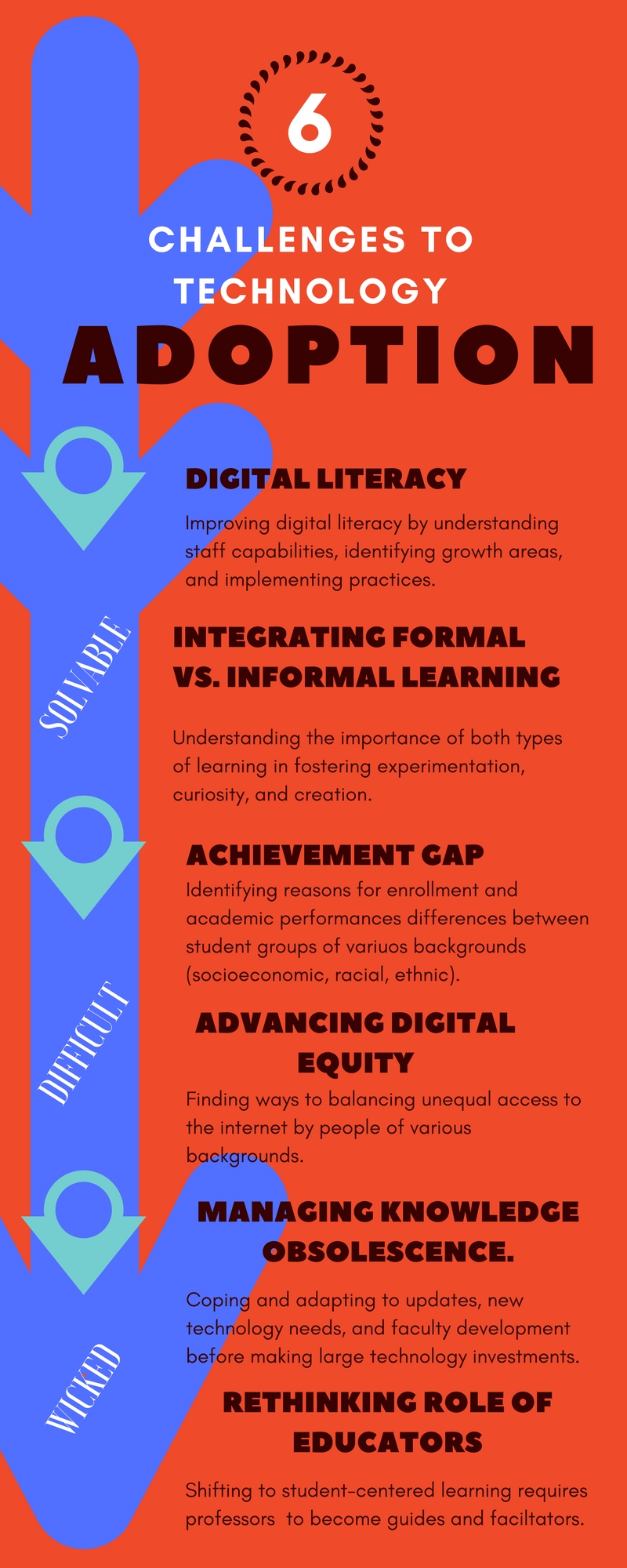

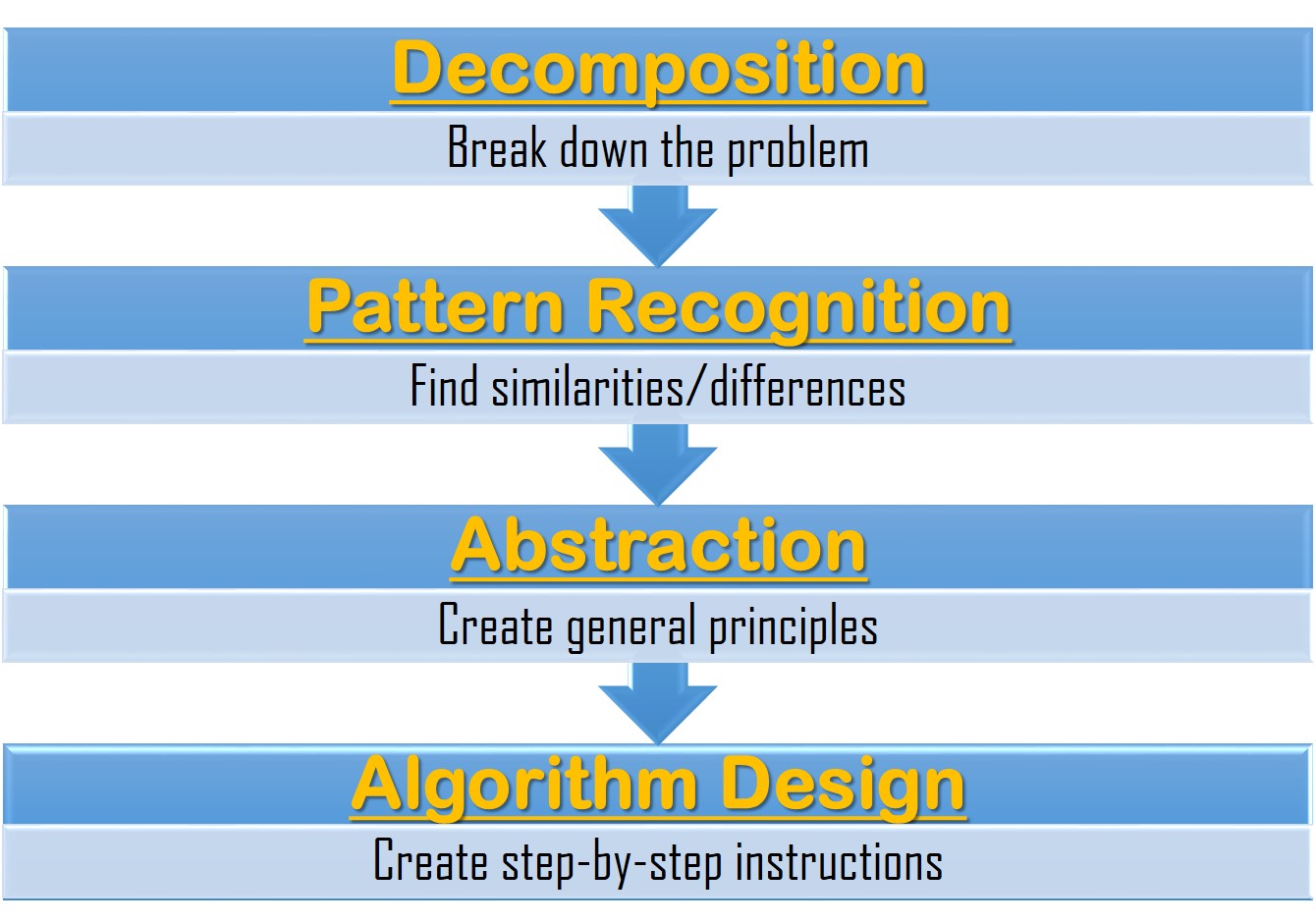

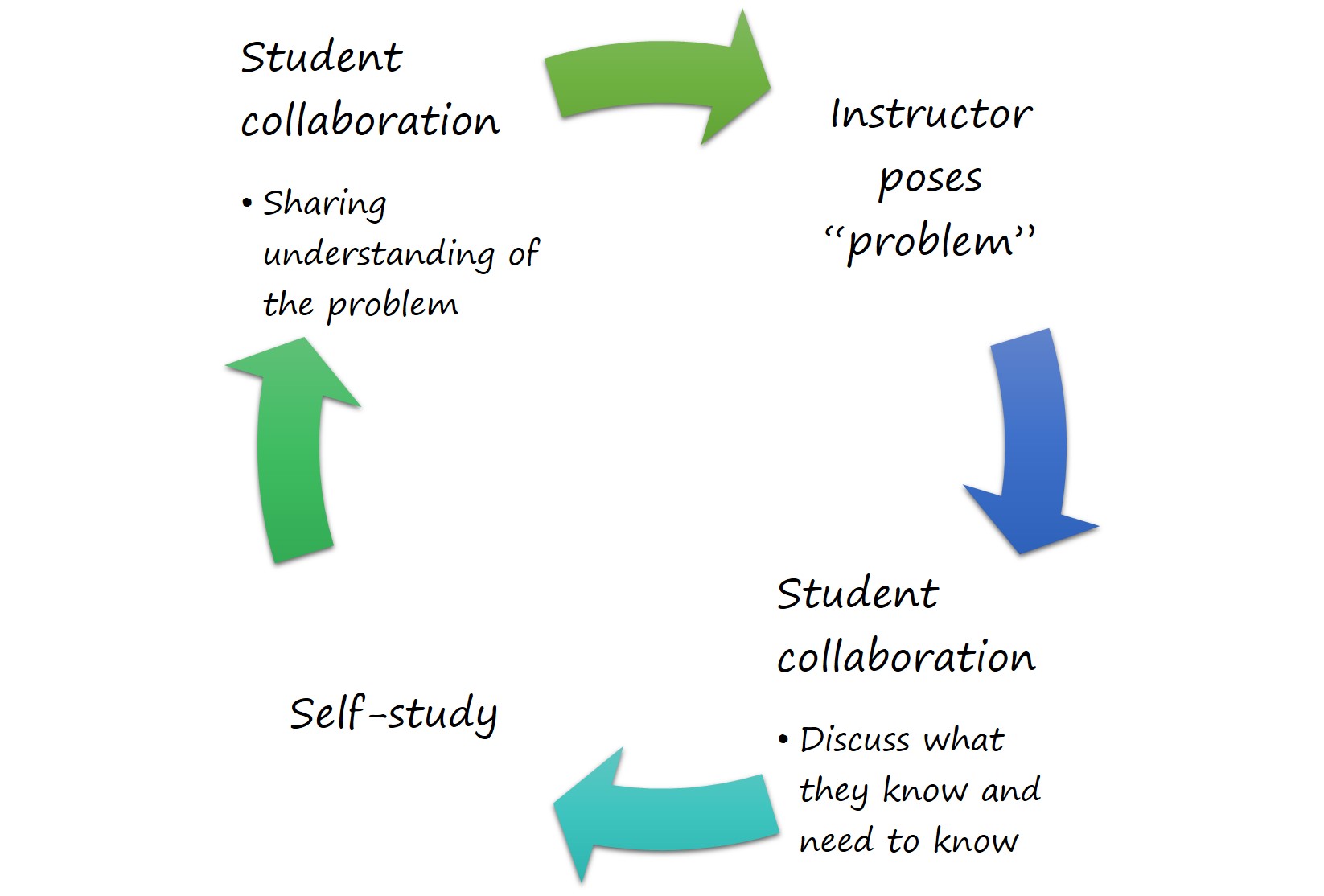

What most PD, including my example of bad PD, lacks is the opportunity to apply and reflect. Research on how we learn notes that learning needs two elements: 1) a social context, which helps us maintain high levels of motivation (because learning takes incredible amounts of effort), and 2) an active component that allows the learner to engage with ideas that can create new experiences, build opportunities to acquire knowledge, or directly challenge what we already know (Gess-Newsome et al., n.d.). Engaging the learner also means recognizing that learners will come into the session with their own conceptions and preconceived notions based on their current learning needs. To account for all of these factors, the researchers from Northern Arizona University strongly recommend the five principles of effective professional development summarized in figure 1.1 below.

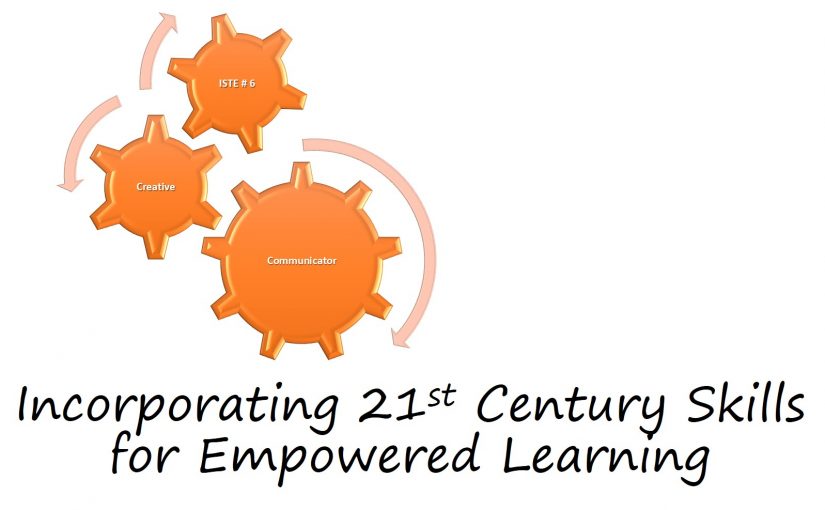

The ISTE Standard 4b explores the properties of good professional development by defining the coach’s role as “design[ing], develop[ing], and implement[ing] technology rich professional learning programs that model principles of adult learning and promote digital age best practices in teaching, learning, and assessment” (ISTE, 2017). The standard highlights all of the principles of effective PD. Coaches should be able to deliver PD that meets the needs of the learner within a context that is relevant to the learner.

While understanding the theory behind effective PD is important, on a personal level, applying these theories will prove crucial in the upcoming months, as I have been asked to facilitate a professional development session at a conference. My audience will be a mixed group of registered dietitians with various levels of expertise in both nutrition education and technology. To develop effective PD and meet these learners’ needs, I realized I will also need to understand which professional development model works best for audiences of mixed technology skill.

After some investigation and feedback, it appears the best approach to this inquiry has two parts: 1) understanding models for technology-infused PD, and 2) understanding the principles of learning differentiation.

Technology-Infused Professional Development.

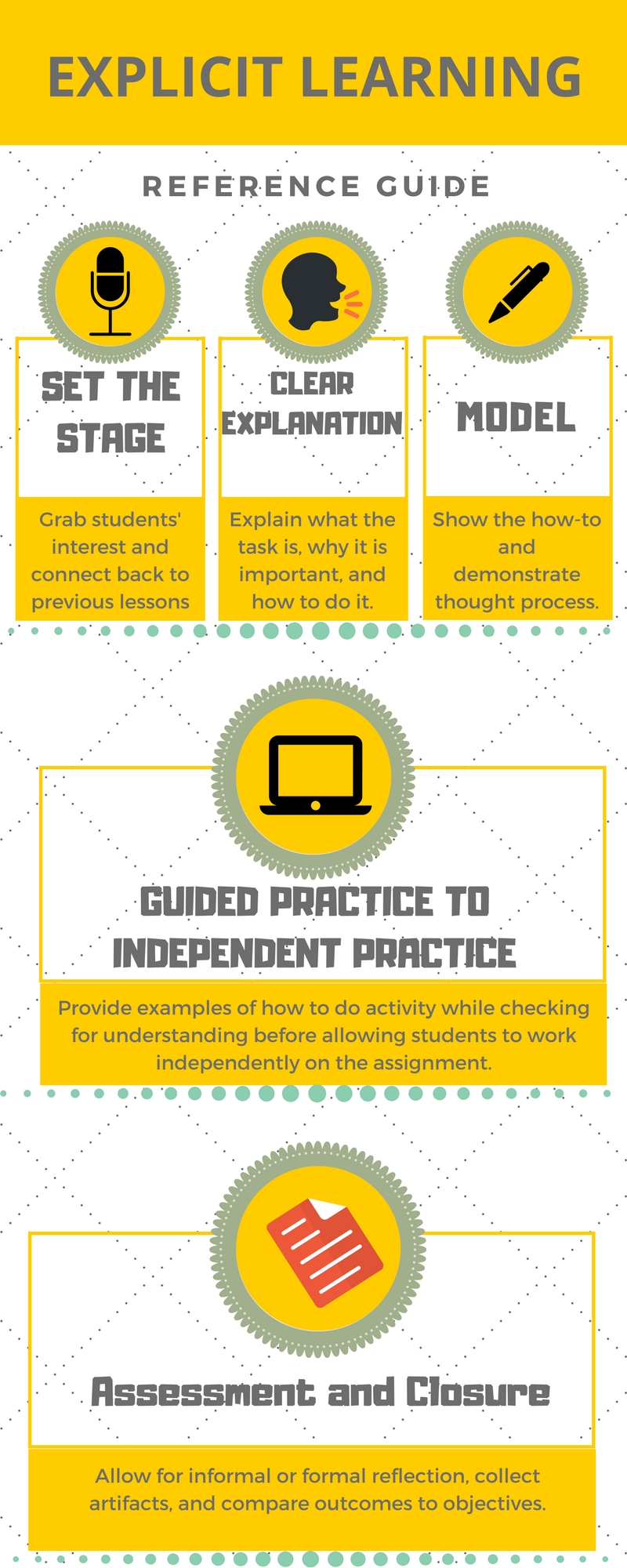

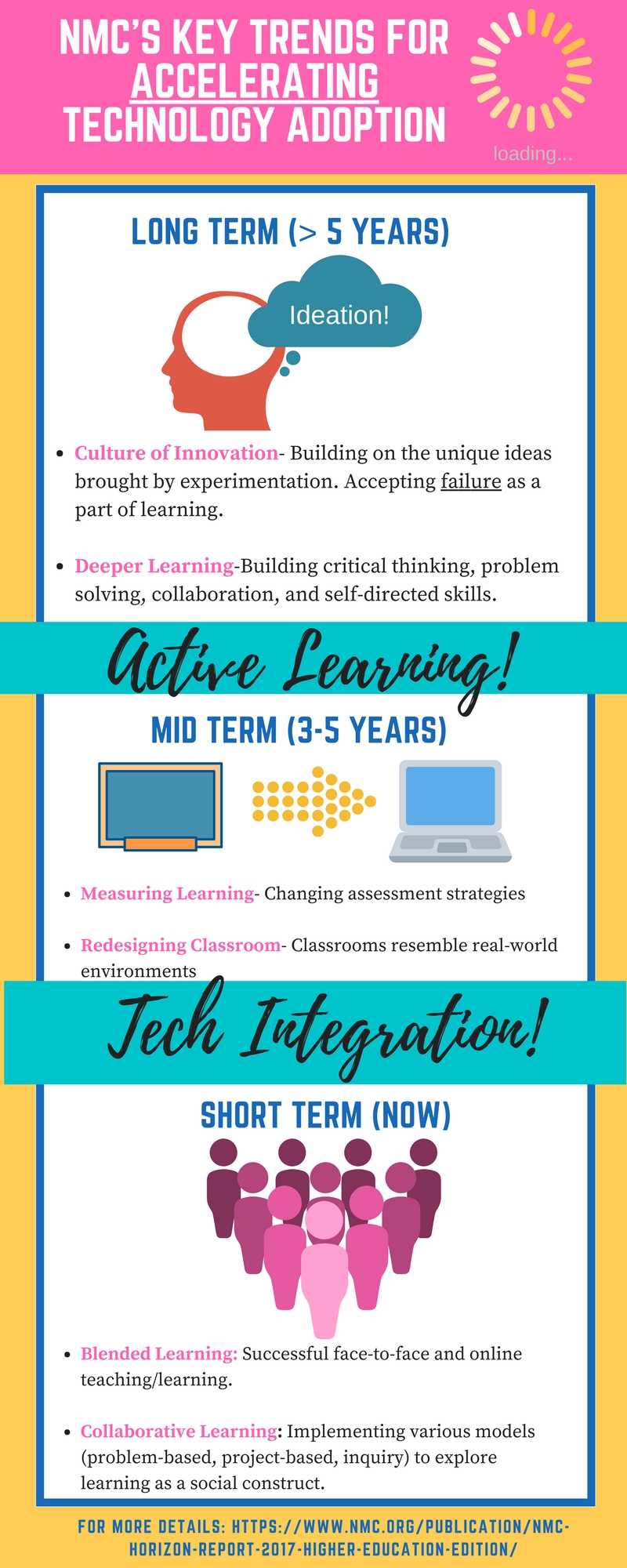

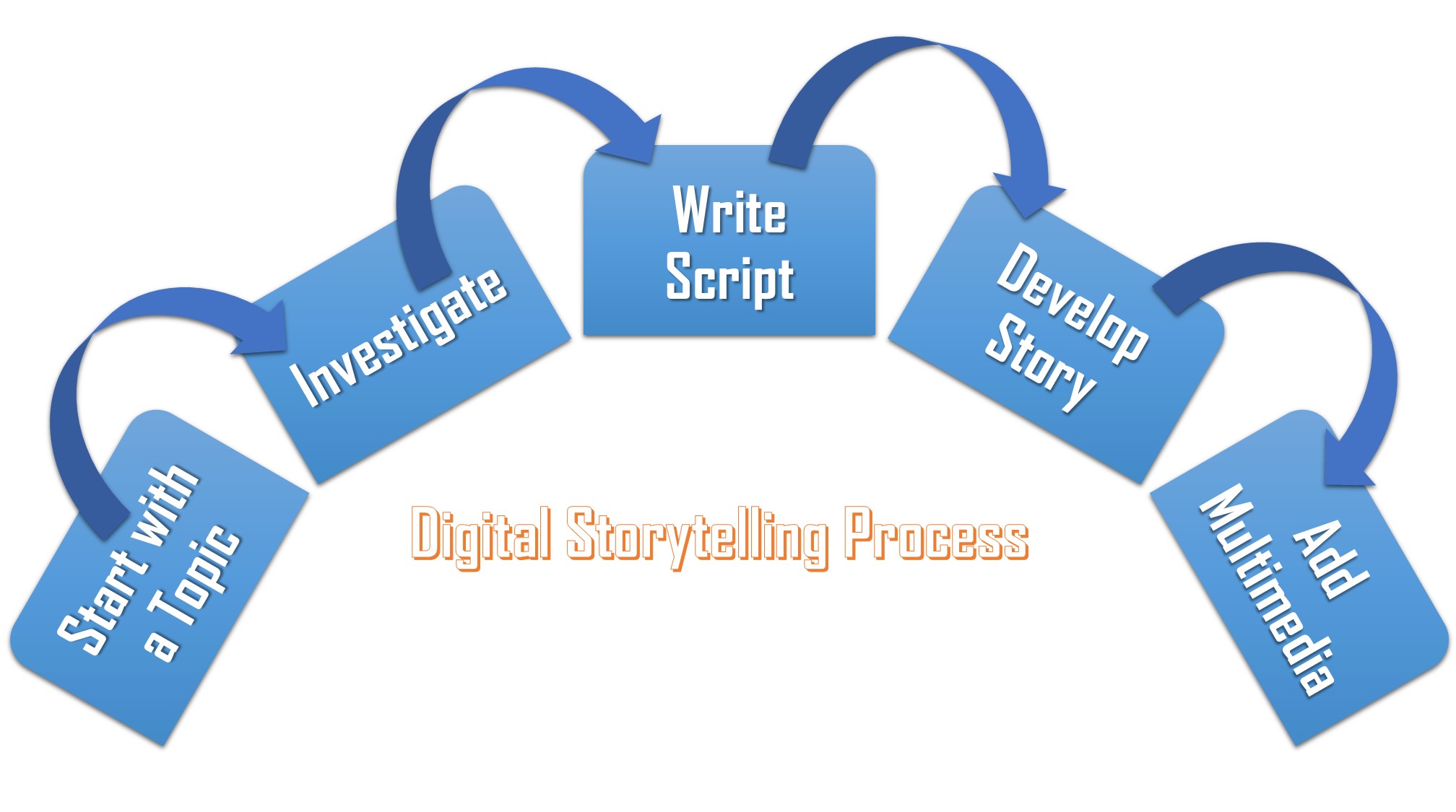

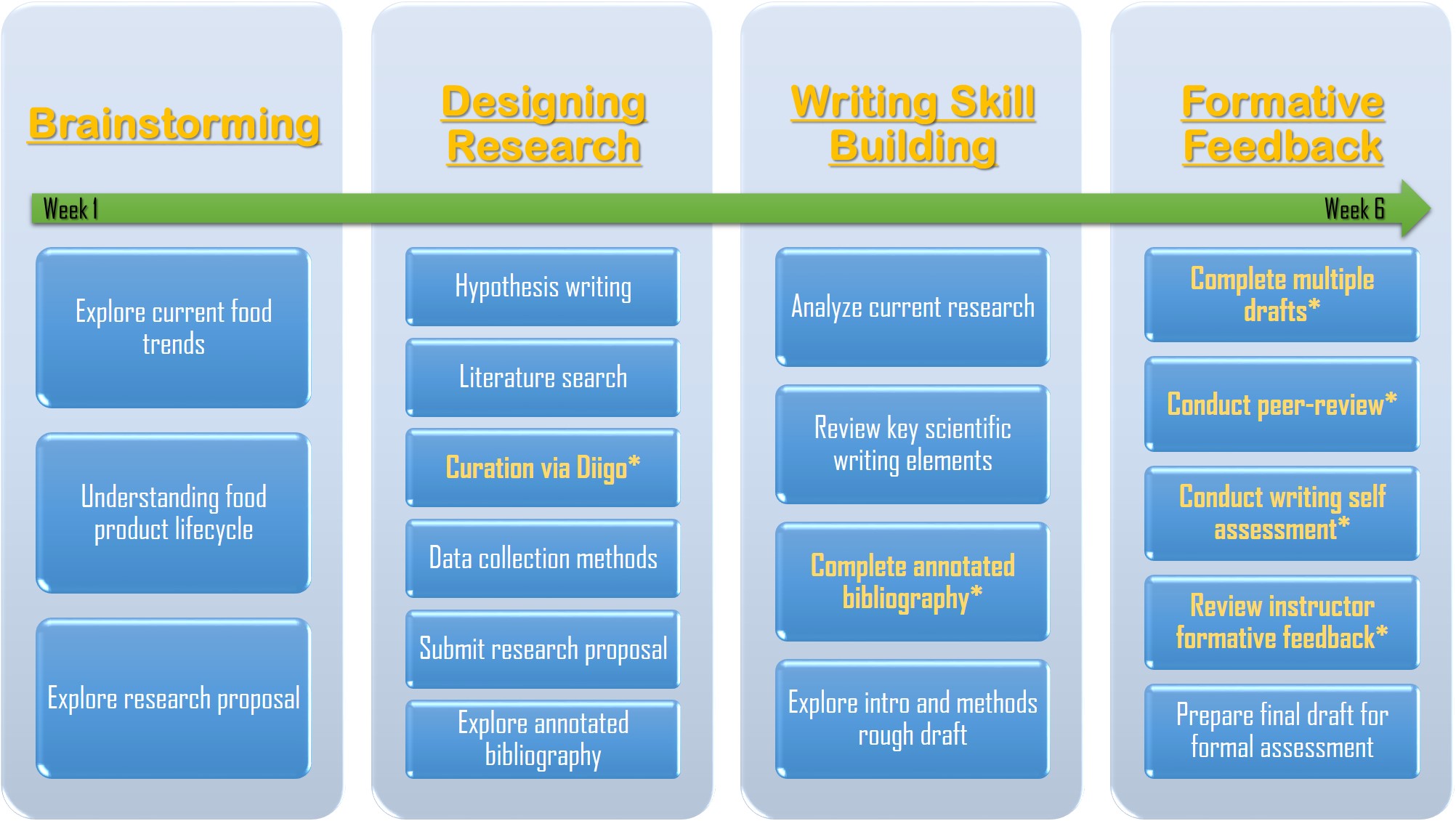

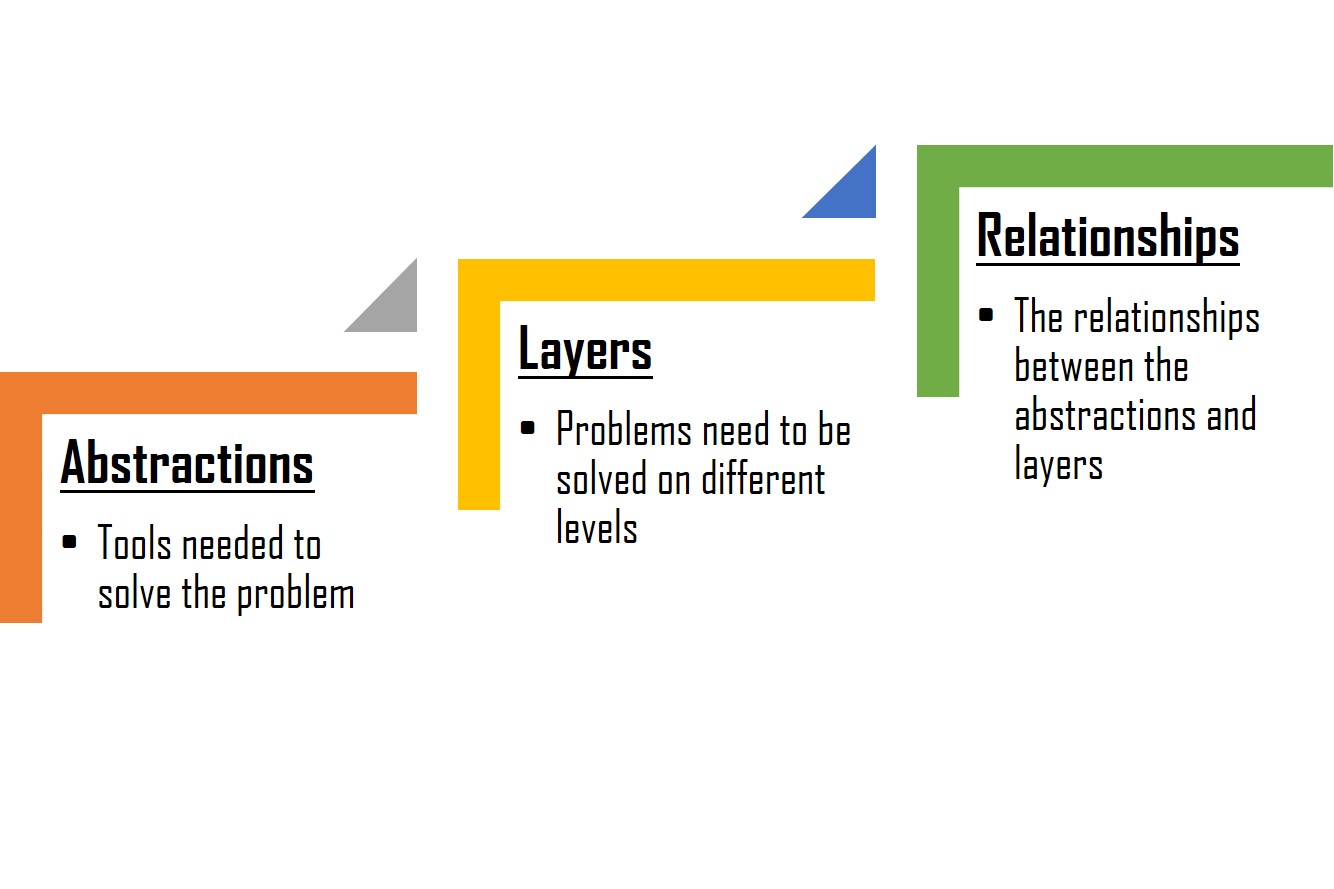

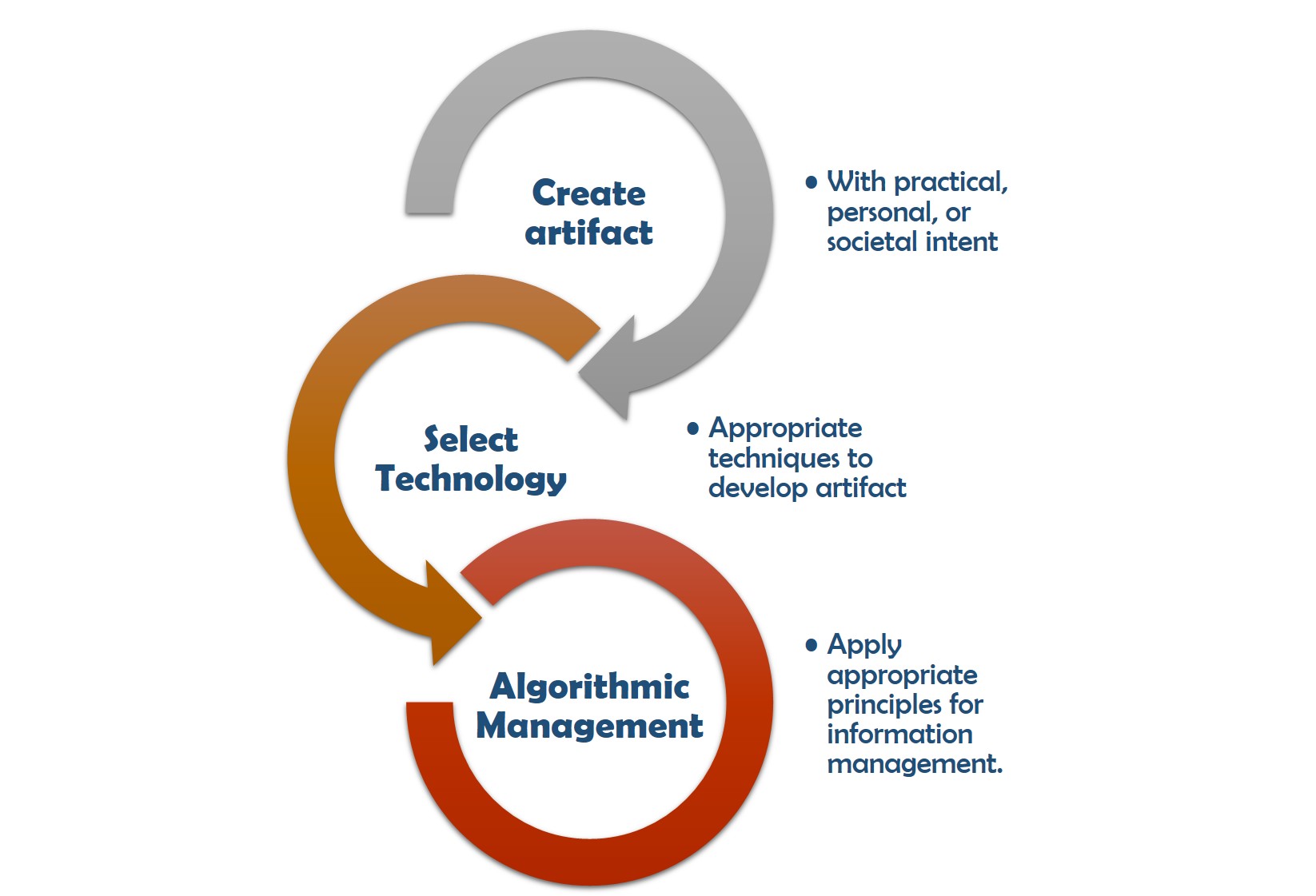

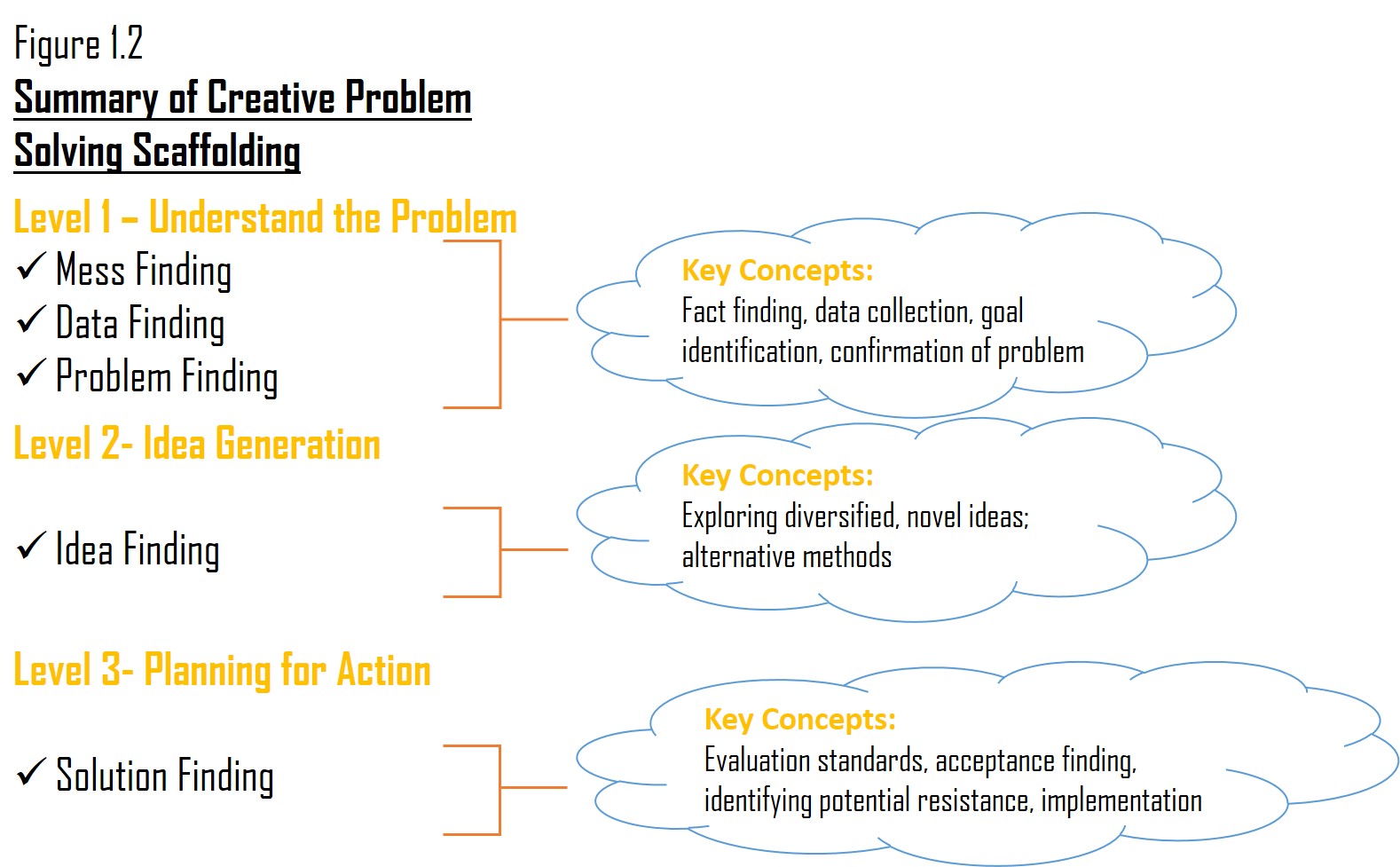

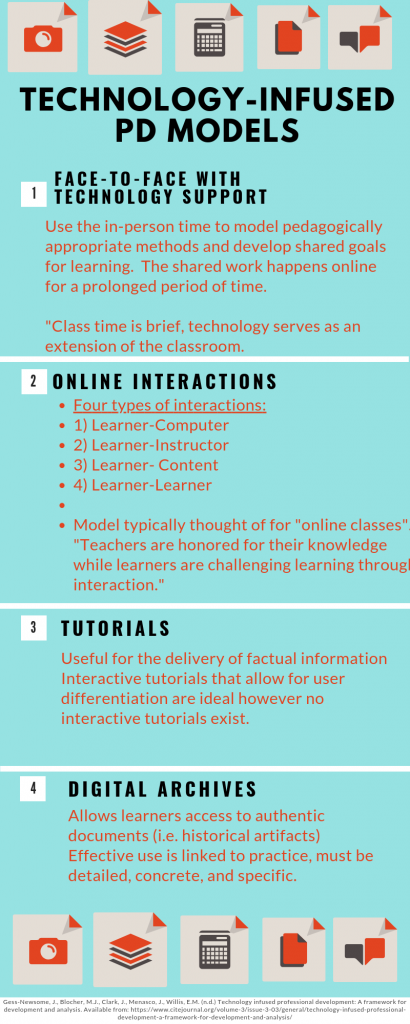

In line with the education best practices noted in the ISTE standard above, and with the evidence-based practice required for all dietetic professional development, the PD should use technology in a way that models adult learning and exposes learners to using technology well in a professional setting. The Northern Arizona University researchers offer four PD models that utilize technology in different ways, as summarized in figure 1.2 below.

Reflecting upon these four models, I realized that professional development does not have to be limited to just one. All could be used as part of an ongoing development process. However, the one that struck me as most useful for the PD session I am planning is the face-to-face model with technology support. I like the idea that the face-to-face portion isn’t an end in itself but rather the beginning of a longer-term conversation. The researchers stressed that audience engagement shapes the direction of the PD through the development of shared learning goals (Gess-Newsome et al., n.d.). This was a unique way to view the face-to-face model that has traditionally been the standard format for PD.

Learning Differentiation.

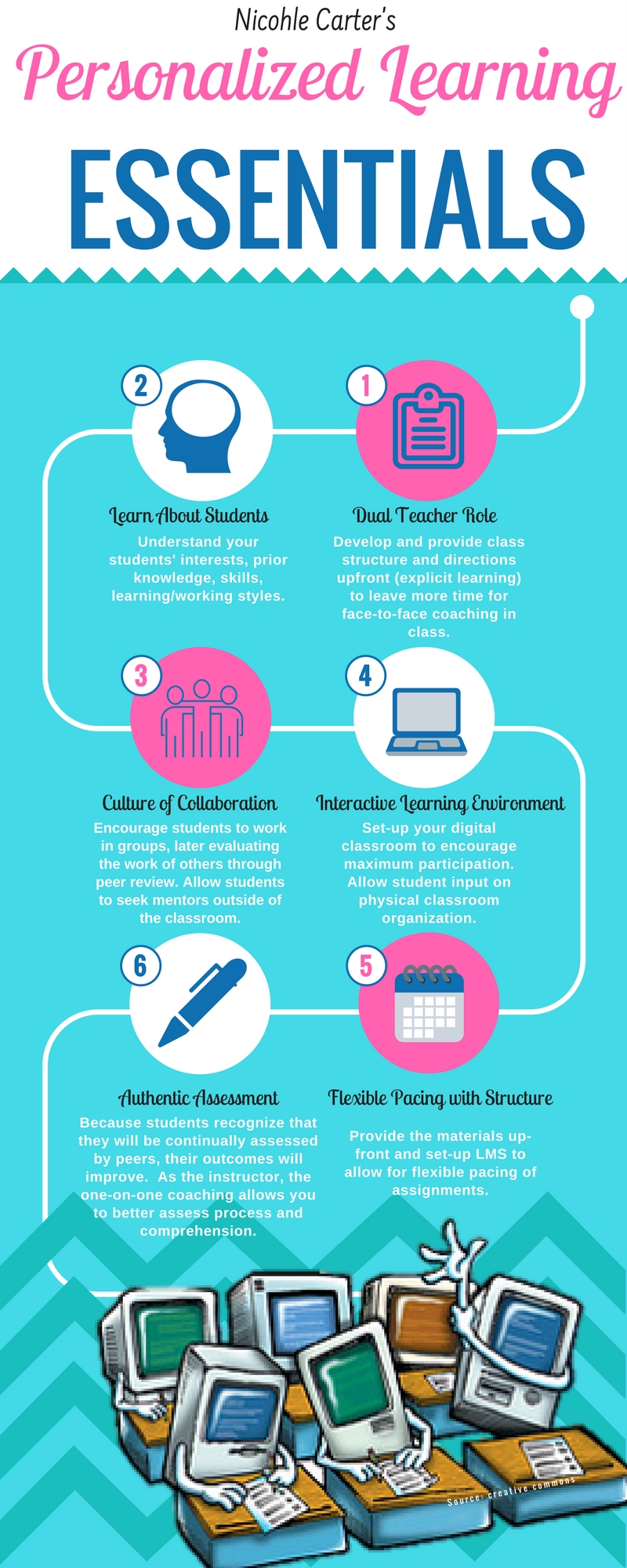

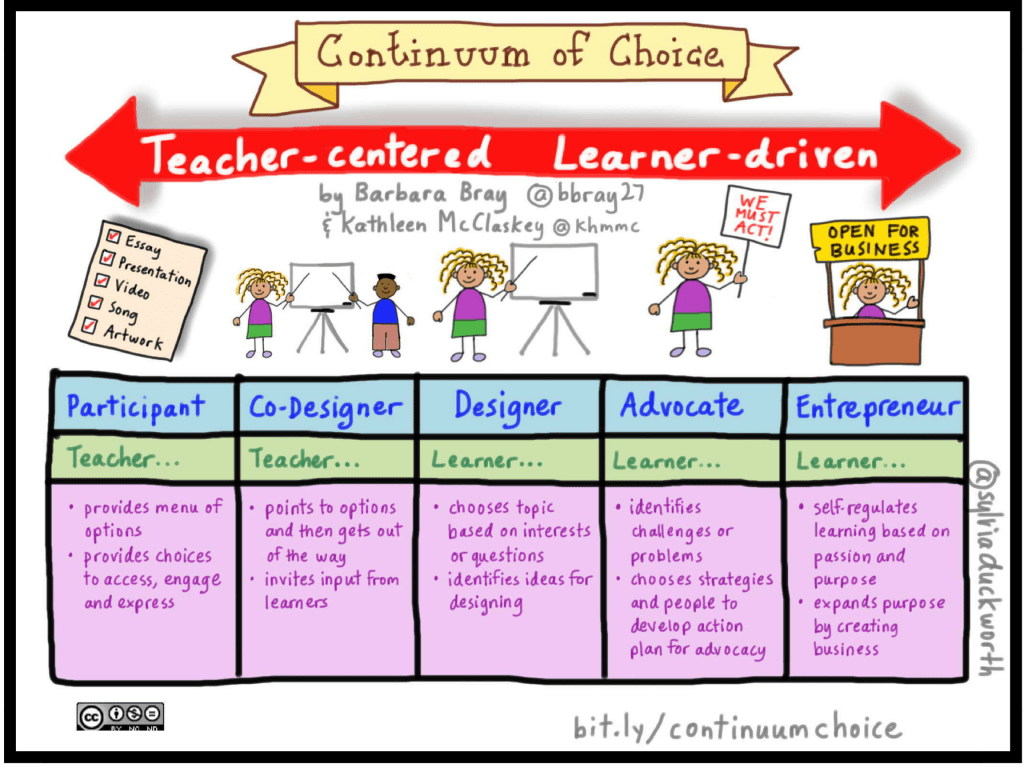

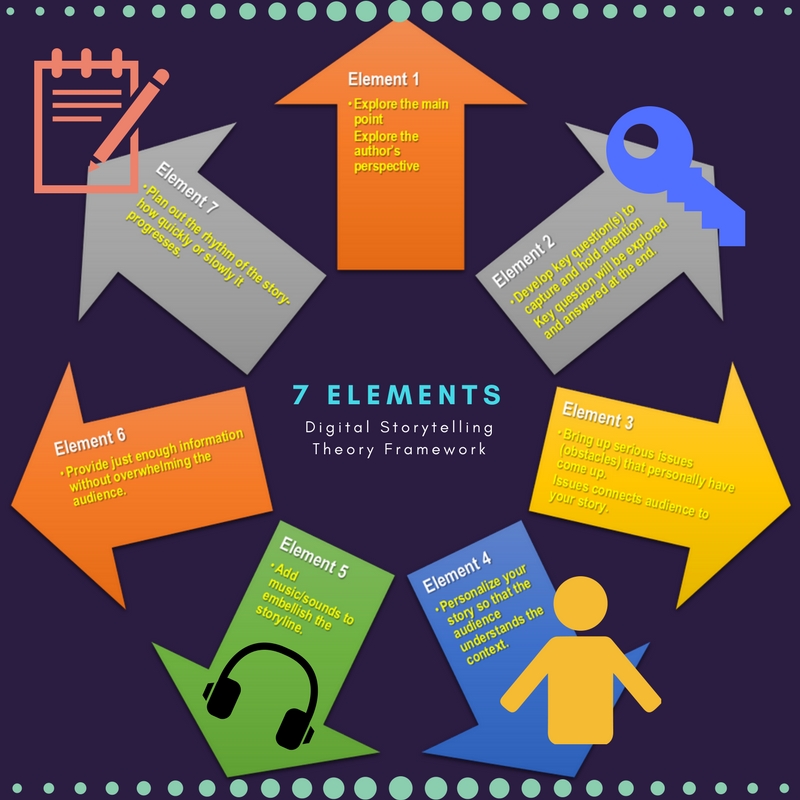

Differentiated learning means that educators take into consideration individual learning styles and levels of readiness before designing the lesson plan (Weselby, 2014). According to Concordia University, there are four ways to incorporate differentiated learning:

1) Content – Though the role of any educator is to ensure that learning outcomes are met, differentiating content concerns what learners are able to do with that content, by applying Bloom’s taxonomy of thinking skills. Depending on the level of the learner, one learner might be content with simply defining a particular concept while another will strive to create a solution with that same content. Allowing learners to select their level of readiness through content differentiation allows for a smoother introduction of the material.

2) Process – In process differentiation, the learners engage with the same content but are allowed a choice in the way they learn it. Not all learners require the same level of instructor assistance or the same materials. Process differentiation also assumes that some learners prefer to learn in groups while others may prefer to learn alone.

3) Product – In this model, the learning outcome is the same but the final product is different. Learners have the ability to choose how they demonstrate mastery in a particular area through product differentiation.

4) Learning Environment – A learning environment that accommodates different learning needs can be crucial to optimal learning. Flexibility is key for this type of differentiation, as learners may want various physical or emotional learning arrangements (Weselby, 2014).

One of my colleagues suggested that I consider differentiated instruction as a strategy for addressing the various technology skill levels of my target audience. I must admit that at first, I wasn’t sure how this could be applied to a conference setting. However, considering the face-to-face technology-infused PD model above, differentiated instruction suddenly became not only plausible but also the more effective method. Differentiated learning aligns with the principles of effective PD by allowing the session to be as learner-centered as possible. Because the learners take more responsibility for their own learning, they become better engaged in the process.

In searching for professional development models that incorporate technology for mixed audiences, I learned that understanding the pillars of good professional development is just as important as applying technology in a relevant mode for everyone to understand. Taking the two factors above into consideration, effective PD for my conference will need both a technology-infused model and the opportunity for differentiated learning.

Resources

Gess-Newsome, J., Blocher, M.J., Clark, J., Menasco, J., & Willis, E.M. (n.d.). Technology infused professional development: A framework for development and analysis. Available from: https://www.citejournal.org/volume-3/issue-3-03/general/technology-infused-professional-development-a-framework-for-development-and-analysis/

ISTE. (2017). ISTE standards for coaches. Available from: https://www.iste.org/standards/for-coaches

Weselby, C. (2014). What is differentiated instruction? Examples on how to differentiate instruction in the classroom. Available from: https://education.cu-portland.edu/blog/classroom-resources/examples-of-differentiated-instruction/